Apple Kills Its Plan to Scan Your Photos for CSAM. Here's What's

By a mysterious writer

Description

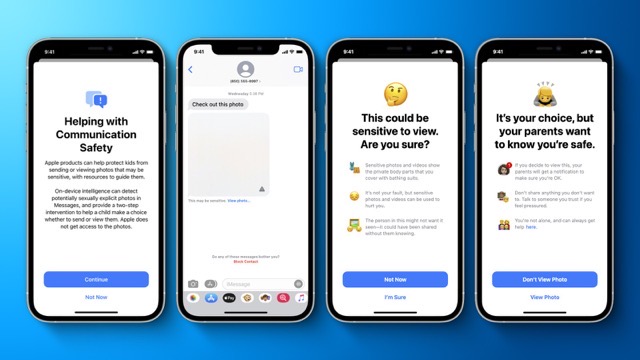

The company plans to expand its Communication Safety features, which aim to disrupt the sharing of child sexual abuse material at the source.

Apple, Microsoft, Facebook forced to come clean on child abuse

WhatsApp CEO, EFF, others say Apple's child safety protocols break

Apple Removes References to CSAM Scanning Feature From Child

iPhone to detect and report child sexual abuse images: Why

iPhone - Wikipedia

Apple AirTag Bug Enables 'Good Samaritan' Attack – Krebs on Security

Apple's Decision to Kill Its CSAM Photo-Scanning Tool Sparks Fresh

Google's Child Abuse Detection Tools Can Also Identify Illegal

Apple drops controversial plans for child sexual abuse imagery