8 Advanced parallelization - Deep Learning with JAX

By a mysterious writer

Description

Using easy-to-revise parallelism with xmap() · Compiling and automatically partitioning functions with pjit() · Using tensor sharding to achieve parallelization with XLA · Running code in multi-host configurations
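As a rough illustration of the tensor sharding topic listed above, the following minimal sketch places an array across a device mesh and lets XLA partition a jitted function over it. It is not taken from the chapter: it uses `jax.jit` with the `jax.sharding` API rather than the `xmap()` and `pjit()` entry points named above, and the mesh axis name `"data"`, the array shape, and the function `scale_and_sum` are hypothetical choices made for the example. It should also run on a single CPU device, in which case the mesh has one entry and nothing is actually split.

```python
import numpy as np
import jax
import jax.numpy as jnp
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Build a 1-D mesh over whatever devices are available (possibly one CPU).
devices = np.array(jax.devices())
mesh = Mesh(devices, axis_names=("data",))  # "data" is an assumed axis name

# Make the batch dimension divisible by the number of devices.
n = len(devices)
x = jnp.arange(n * 4 * 3, dtype=jnp.float32).reshape(n * 4, 3)

# Shard the leading (batch) axis across the "data" mesh axis;
# the second axis is replicated (None).
x_sharded = jax.device_put(x, NamedSharding(mesh, P("data", None)))

@jax.jit
def scale_and_sum(a):
    # XLA partitions this computation according to the input sharding.
    return jnp.sum(a * 2.0, axis=1)

y = scale_and_sum(x_sharded)
print(y.shape, y.sharding)
```

The function body contains no parallelism-specific code; the layout is decided entirely by how the input is placed, so changing the `PartitionSpec` changes the partitioning without touching `scale_and_sum`.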

Learn JAX in 2023: Part 2 - grad, jit, vmap, and pmap

20 Best Parallel Computing Books of All Time - BookAuthority

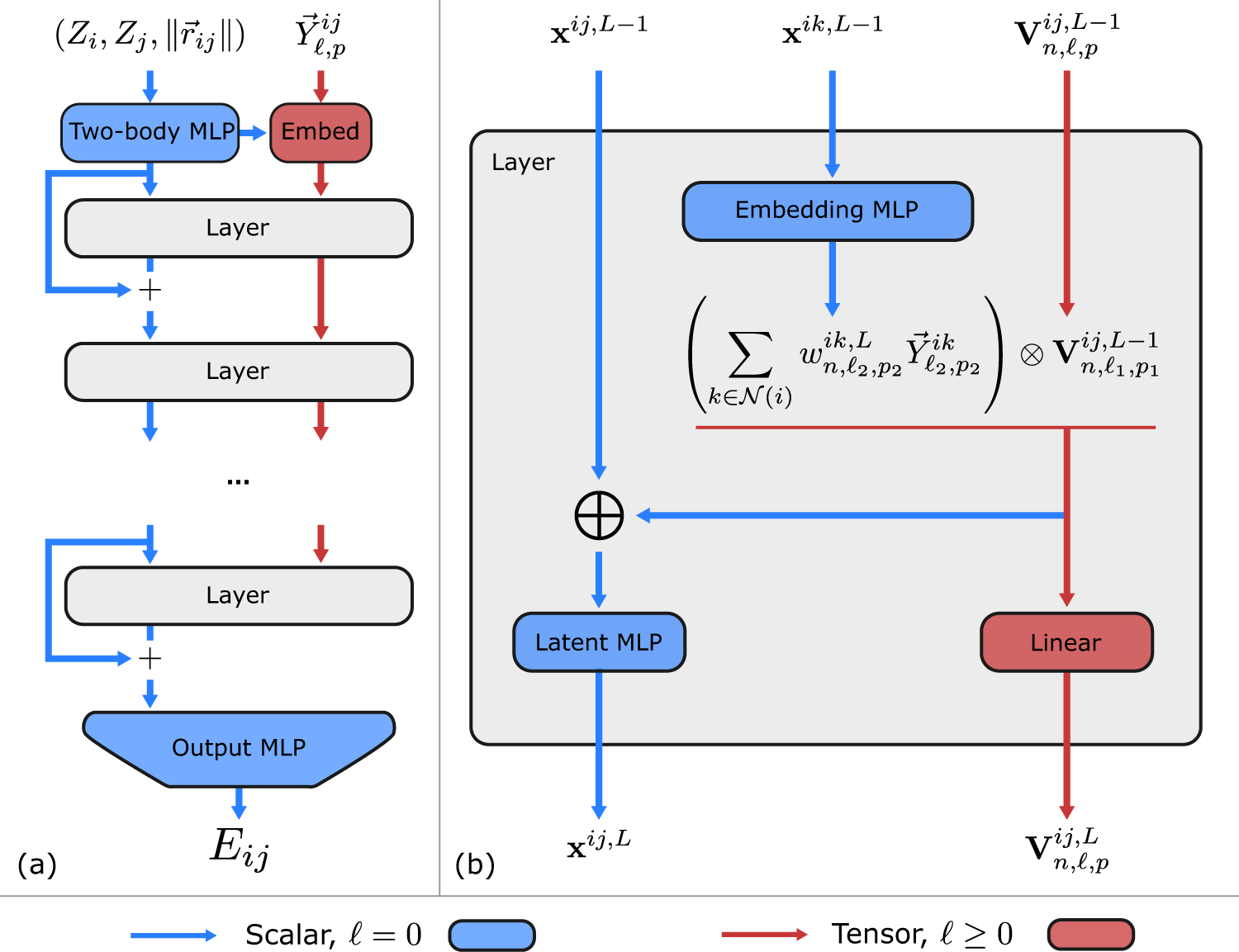

Learning local equivariant representations for large-scale

Using JAX to accelerate our research - Google DeepMind

Scaling Language Model Training to a Trillion Parameters Using

Learning JAX in 2023: Part 1 — The Ultimate Guide to Accelerating

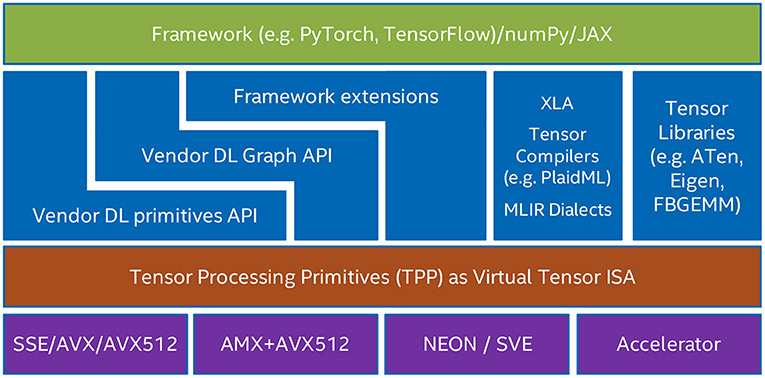

Frontiers Tensor Processing Primitives: A Programming

OpenXLA is available now to accelerate and simplify machine

Scaling Language Model Training to a Trillion Parameters Using

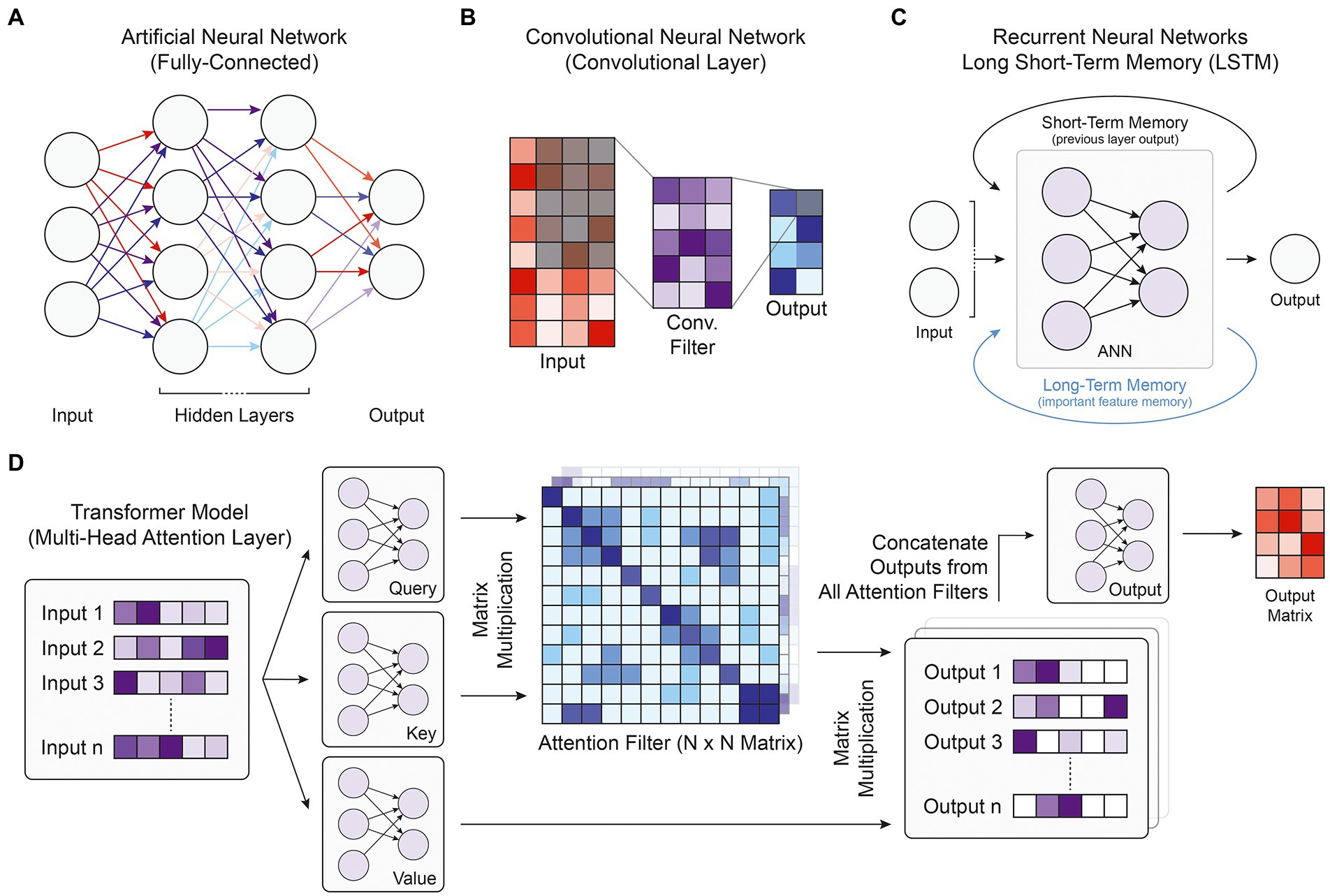

Frontiers Deep learning approaches for noncoding variant

Using Cloud TPU Multislice to scale AI workloads

Efficiently Scale LLM Training Across a Large GPU Cluster with
