nonlinear optimization - Do we need steepest descent methods, when minimizing quadratic functions? - Mathematics Stack Exchange

Description

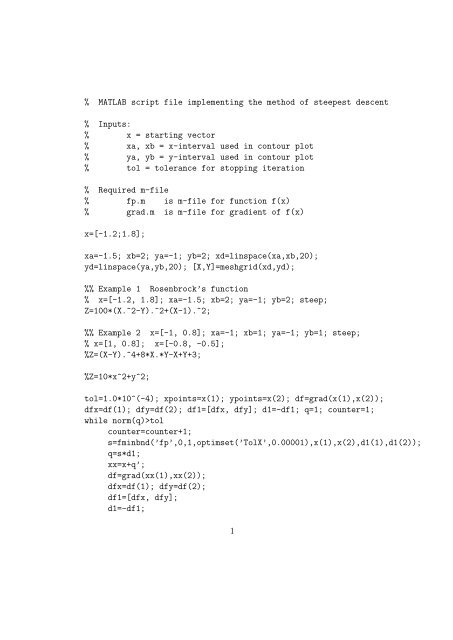

I'm studying nonlinear programming and steepest descent methods for quadratic multivariable functions. I have a question, highlighted in the following picture:

My question is: If we can expli

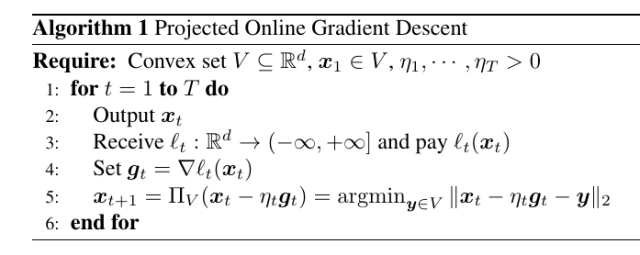
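To make the question in the title concrete, here is a minimal sketch comparing the two routes for a strictly convex quadratic $f(x) = \tfrac{1}{2} x^T Q x - b^T x$: solving $Qx = b$ directly, and running steepest descent with the exact line-search step $\alpha = g^T g / (g^T Q g)$. The matrix `Q`, vector `b`, and all function names are illustrative assumptions, not taken from the original post.

```python
def matvec(Q, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(q * xi for q, xi in zip(row, x)) for row in Q]


def dot(u, v):
    """Euclidean inner product of two vectors."""
    return sum(a * b for a, b in zip(u, v))


def steepest_descent(Q, b, x0, tol=1e-10, max_iter=10_000):
    """Minimize 0.5*x^T Q x - b^T x, assuming Q is symmetric positive definite."""
    x = list(x0)
    for k in range(max_iter):
        # Gradient of the quadratic: g = Qx - b
        g = [qx - bi for qx, bi in zip(matvec(Q, x), b)]
        gg = dot(g, g)
        if gg ** 0.5 < tol:
            return x, k
        # Exact line search along -g has a closed form for quadratics
        alpha = gg / dot(g, matvec(Q, g))
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
    return x, max_iter


def solve_2x2(Q, b):
    """Solve Qx = b for a 2x2 system by Cramer's rule (the explicit route)."""
    (a, c), (d, e) = Q
    det = a * e - c * d
    return [(e * b[0] - c * b[1]) / det, (a * b[1] - d * b[0]) / det]


# Hypothetical example data: a small symmetric positive definite system
Q = [[3.0, 1.0], [1.0, 2.0]]
b = [1.0, 1.0]

x_direct = solve_2x2(Q, b)
x_sd, iters = steepest_descent(Q, b, [0.0, 0.0])
print("direct solve:    ", x_direct)
print("steepest descent:", x_sd, "after", iters, "iterations")
```

For a system this small the direct solve is clearly simpler. The iterative route tends to become attractive only when $Q$ is large or sparse enough that factoring it is expensive, since each step needs only matrix-vector products.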

gradient descent - Exact line search - Boyd Convex Optimization exercise - How do I derive this? - Mathematics Stack Exchange

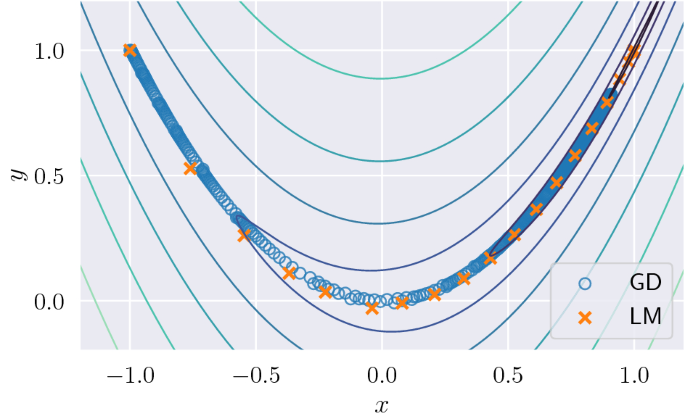

Majorization-minimization-based Levenberg–Marquardt method for constrained nonlinear least squares

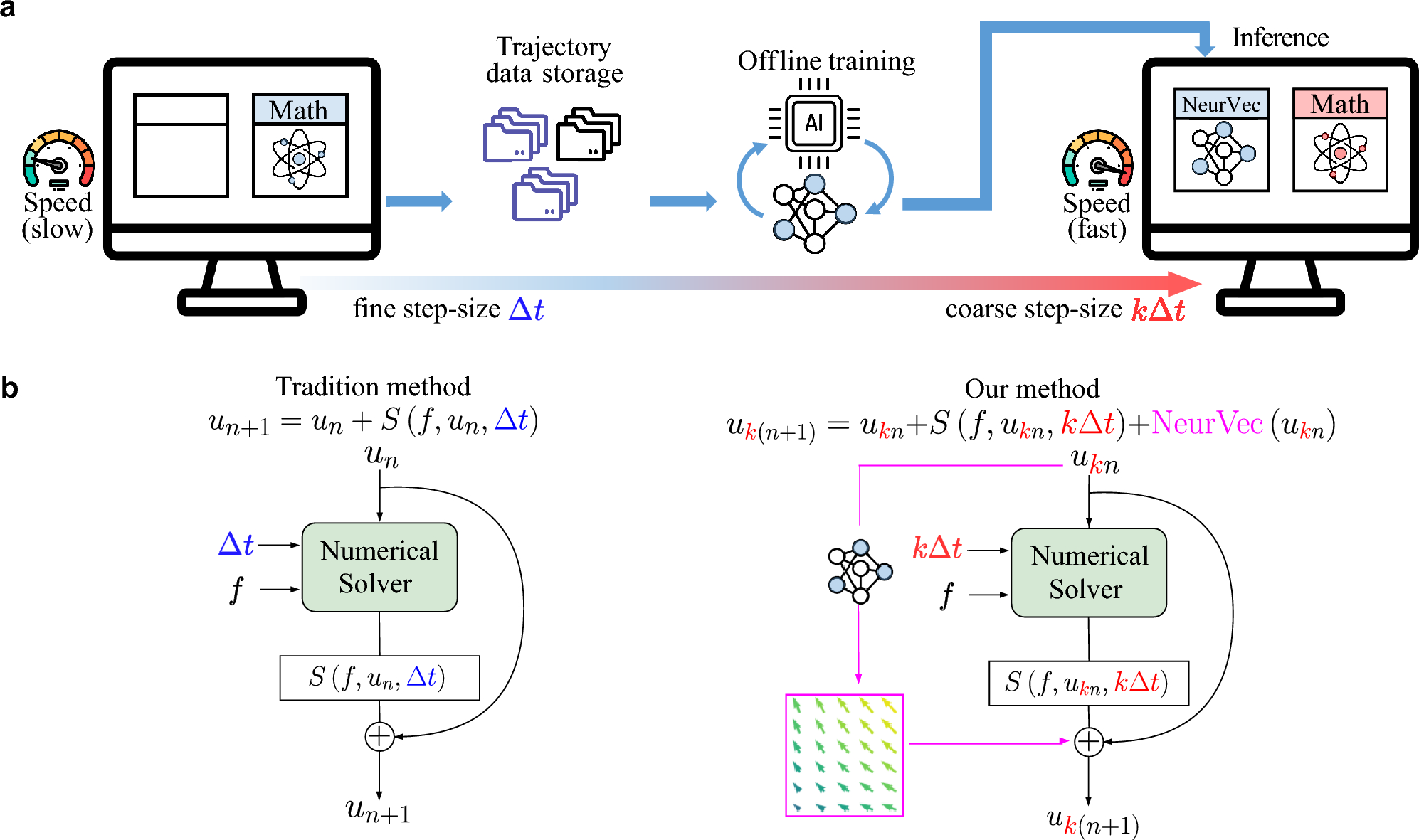

On fast simulation of dynamical system with neural vector enhanced numerical solver

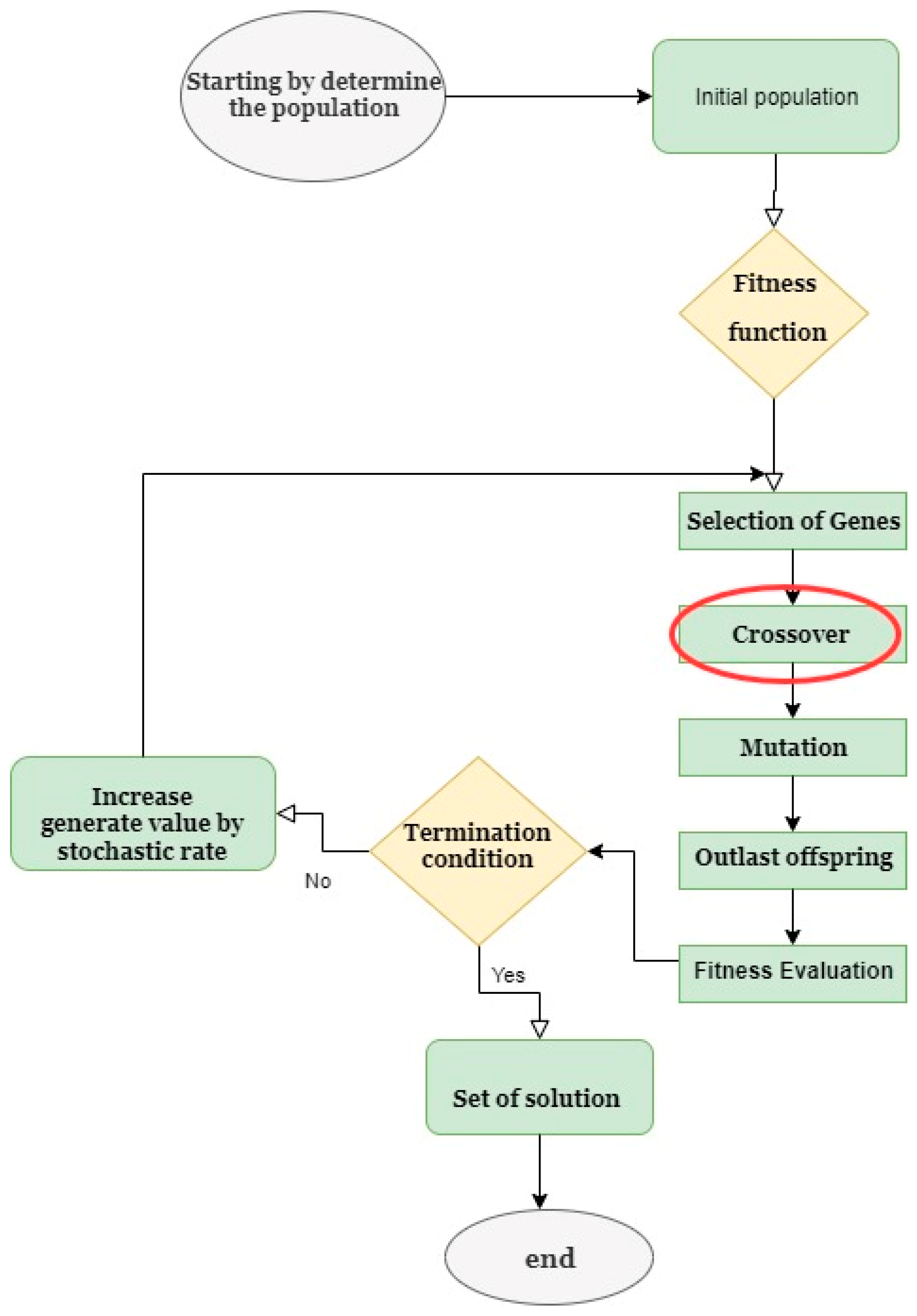

Systems, Free Full-Text

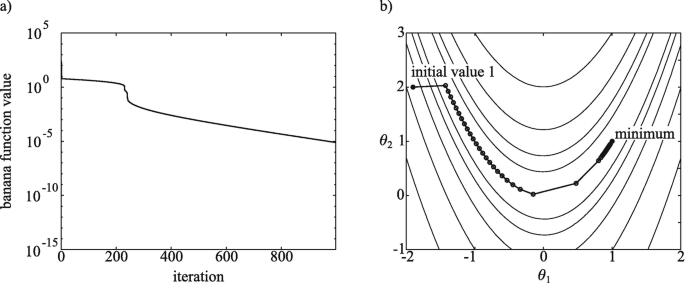

Nonlinear Local Optimization

Mathematical optimization - Wikipedia

calculus - Minimizing a quadratic function subject to quadratic constraints - Mathematics Stack Exchange

taylor expansion - Newton's Method vs Gradient Descent? - Mathematics Stack Exchange

optimization - Minimize pure quadratic subject to linear equality constraint - Mathematics Stack Exchange

Mathematics, Free Full-Text

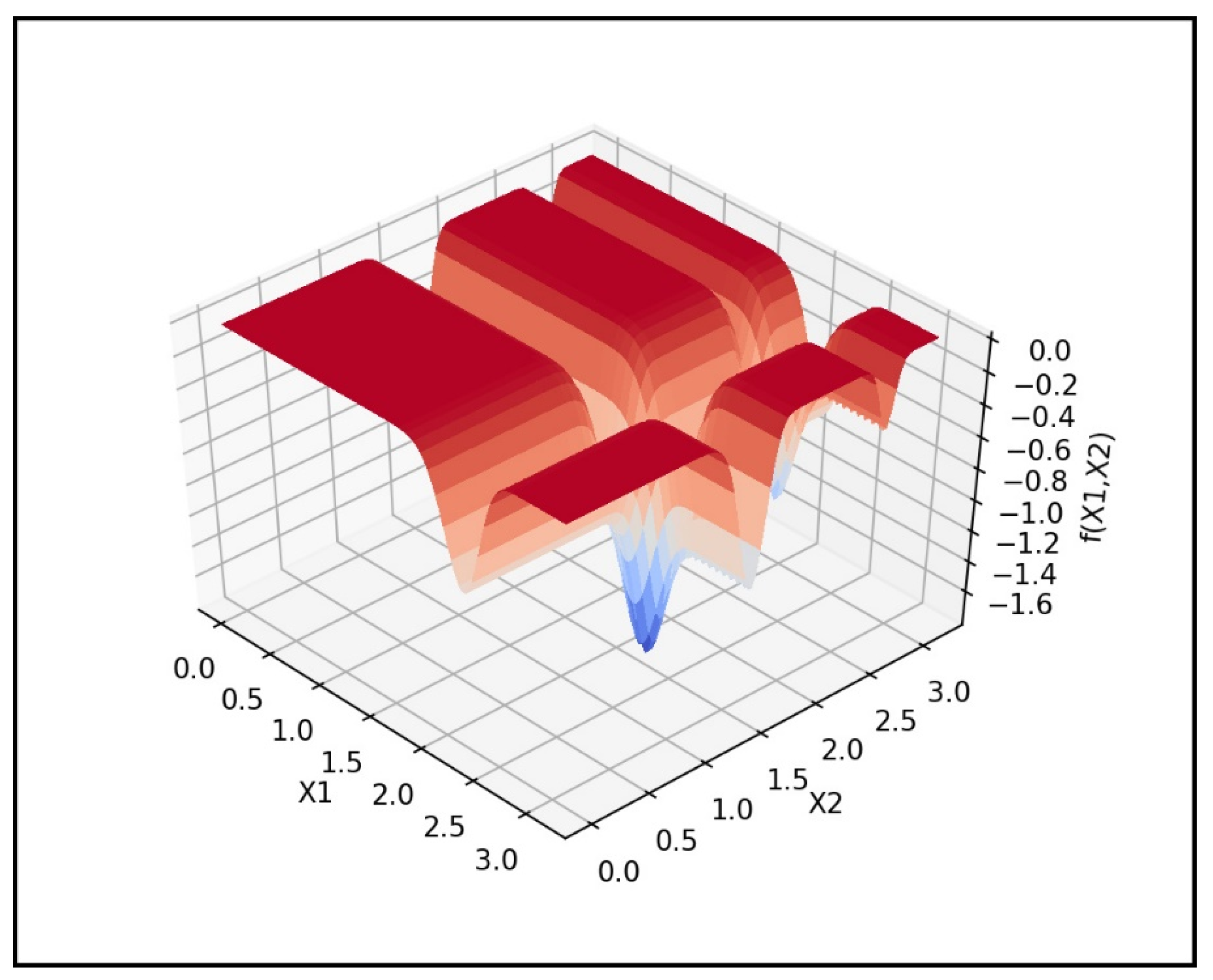

optimization - How to minimize a function? - Mathematics Stack Exchange

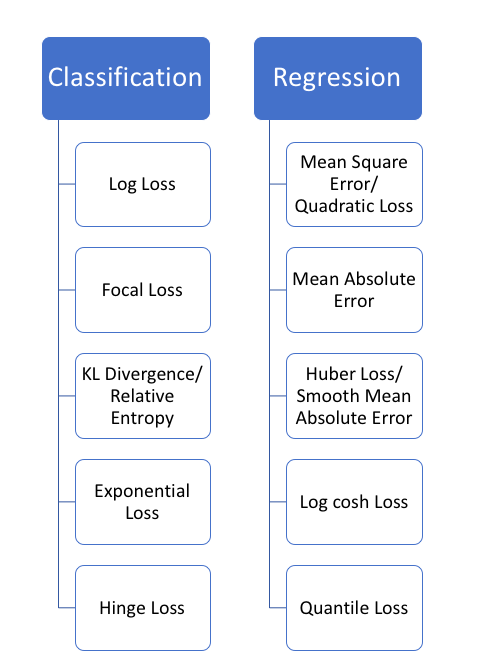

5 Regression Loss Functions All Machine Learners Should Know, by Prince Grover

calculus - First-order necessary condition for relative minimum point - Mathematics Stack Exchange
