Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave (Part 1)

By a mysterious writer

Description

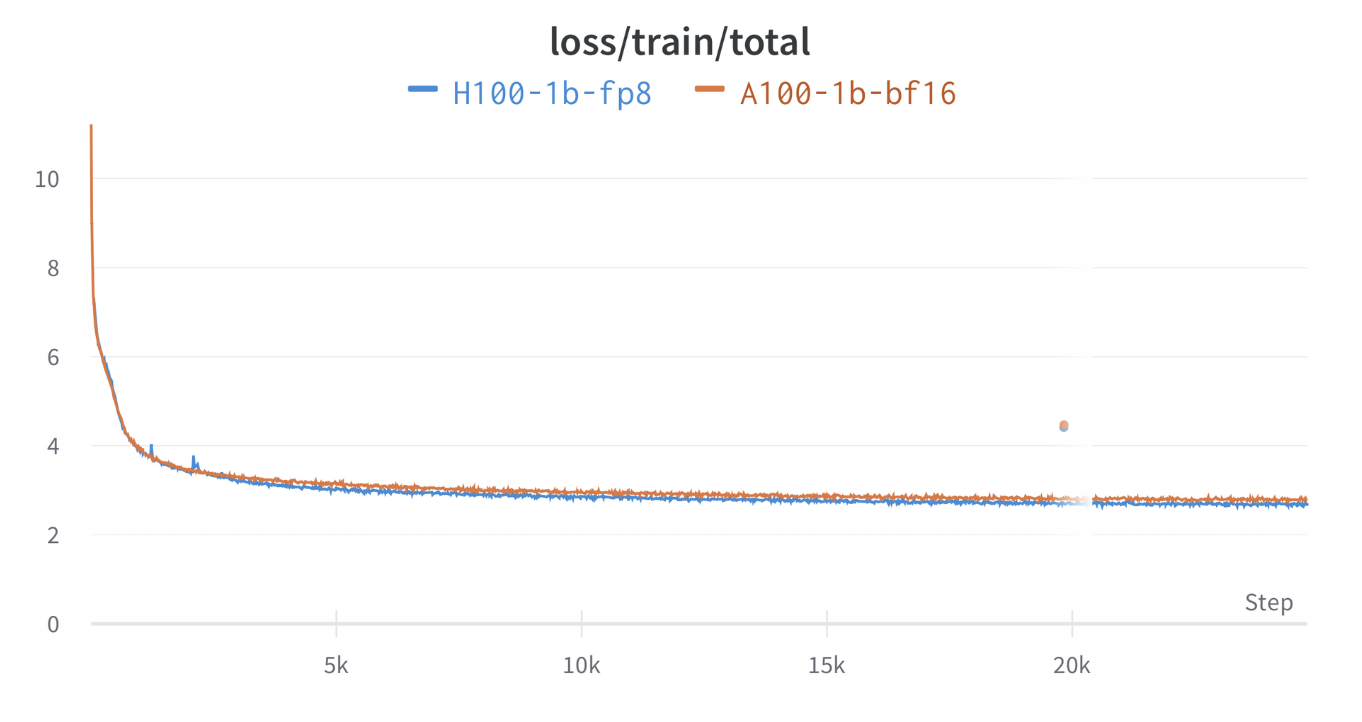

Nvidia Leads, Habana Challenges on MLPerf GPT-3 Benchmark - EE Times

In The News — CoreWeave

MLPerf Inference 3.0 Highlights - Nvidia, Intel, Qualcomm and…ChatGPT

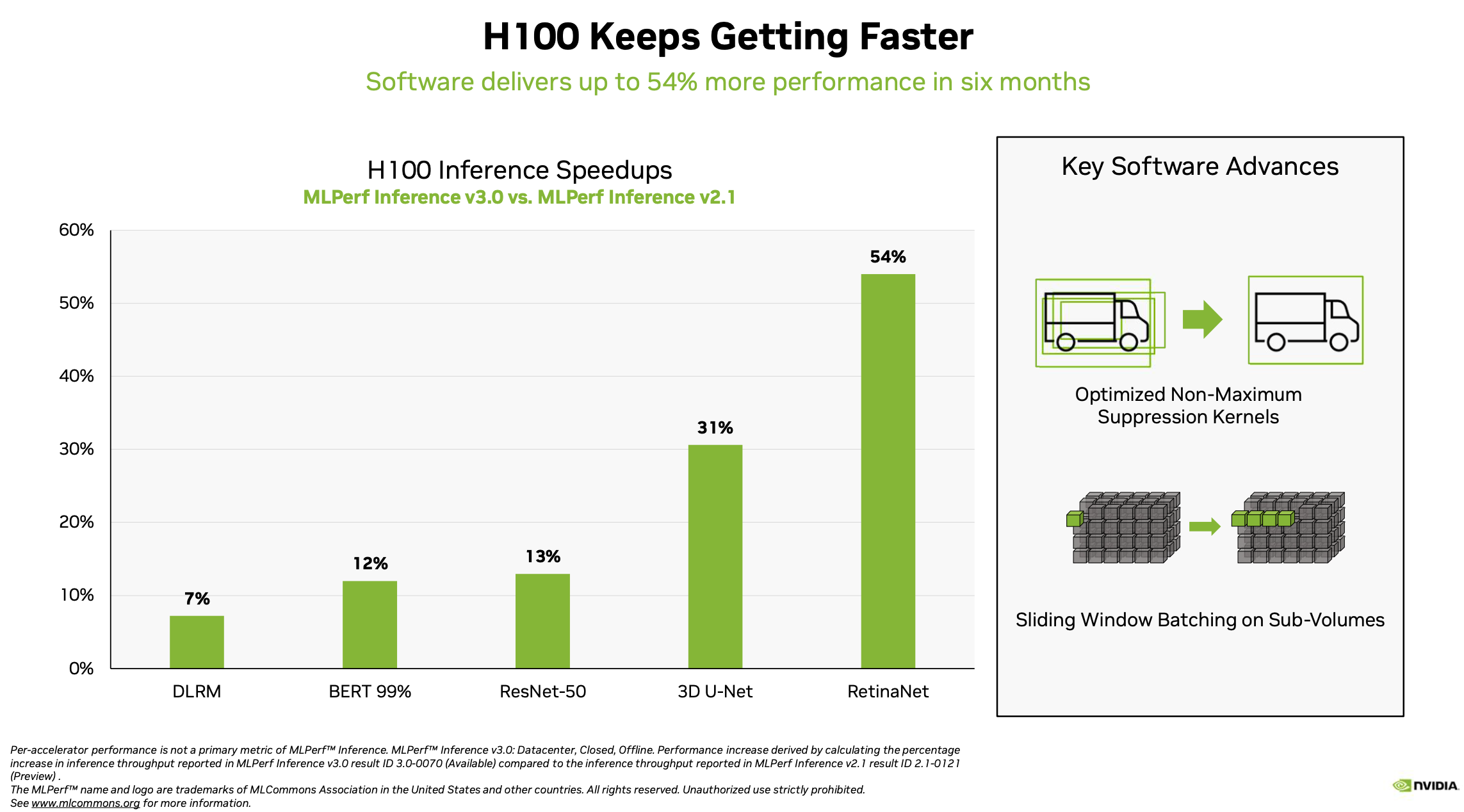

Full-Stack Innovation Fuels Highest MLPerf Inference 2.1 Results for NVIDIA

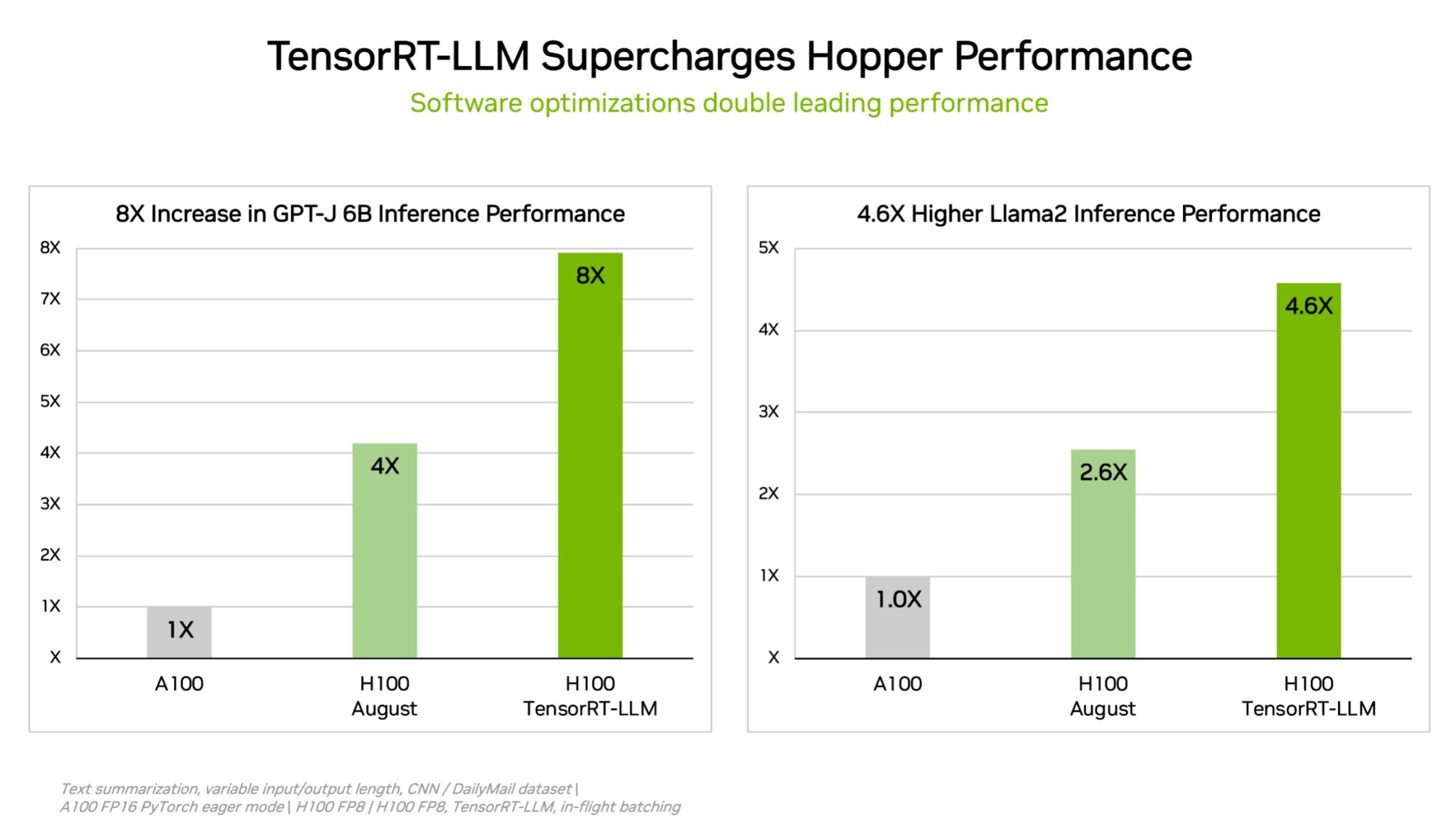

NVIDIA TensorRT-LLM Enhancements Deliver Massive Large Language Model Speedups on NVIDIA H200

NVIDIA Hopper Architecture In-Depth

NVIDIA H100 GPUs Dominate MLPerf's Generative AI Benchmark

Achieving Top Inference Performance with the NVIDIA H100 Tensor Core GPU and NVIDIA TensorRT-LLM

OGAWA, Tadashi on X: Benchmarking Large Language Models on NVIDIA H100 GPUs with CoreWeave, Part 1. Apr 27, 2023. H100 vs A100: BF16: 3.2x; Bandwidth: 1.6x; GPT training BF16: 2.2x (

NVIDIA H100 GPUs Set the Standard for Generative AI in the First MLPerf Benchmark

NVIDIA's H100 GPUs & The AI Frenzy; a Rundown of Current Situation

Efficiently Scale LLM Training Across a Large GPU Cluster with Alpa and Ray
